Meet the Team

Austin Lee, Ph.D.

Lead Researcher

Jake Liang, Ph.D.

Researcher

Sookyung Cho, Ph.D.

Researcher

Humanoid robot

Nao Evolution V6

by Softbank Robotics

Research

Fear of AI & building trust

Are humans afraid of AI? Our study finds that a significant portion of the US population, around 26%, reports heightened levels of fear toward AI and robots. Moreover, disadvantaged groups, across gender, age, ethnicity, education, and income, are more likely to experience such fears. Our research shows that these fears are linked to other concerns, such as loneliness and worries about job security. However, our team believes that trust in AI can be built through effective communication strategies. Our study proposes that trust development is a gradual process that requires careful calibration to reach optimal levels. We recommend that AI developers prioritize establishing open lines of communication with users and consider the specific needs and concerns of different populations to build trust in AI technologies.

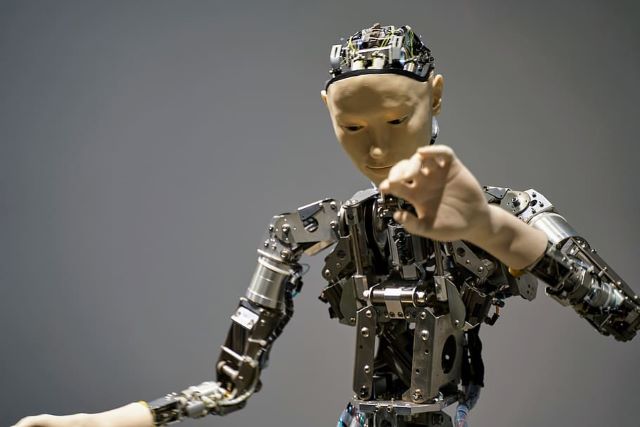

AI can persuade humans

Although many people express concerns about trusting AI, our research has shown that AI and robots can effectively persuade humans through various communication techniques. We believe that this persuasive potential of AI can be leveraged to promote healthy behaviors, such as vaccination, as well as prosocial behaviors, such as helping those in need. Furthermore, our team has found that communication techniques can also be employed to build trust in AI and foster positive interaction outcomes. Our studies suggest that by utilizing effective communication strategies, AI developers can not only increase the persuasive power of their technologies but also promote trust and enhance the overall user experience.

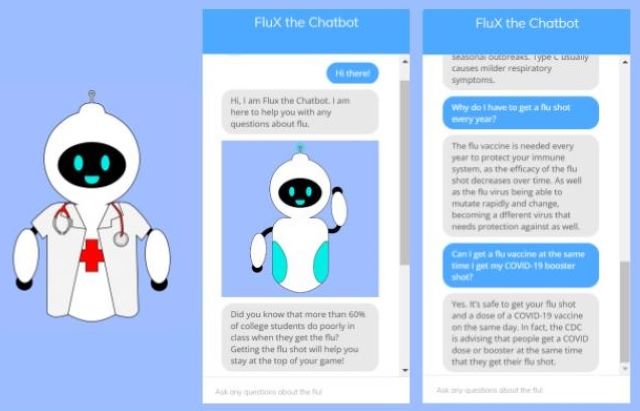

Chatbot for flu vaccine persuasion

As part of our research into the intersection of AI and health communication, our team has launched a new project featuring a chatbot named Dr. Flux. Using the latest version of AIML (AI Markup Language), Dr. Flux engages college students in conversations about the importance of getting a yearly flu shot. Unlike many other chatbots, Dr. Flux is highly interactive, delivering natural and persuasive dialogue through a friendly animated character that can be accessed on mobile devices. Our team has carefully designed Dr. Flux to not only provide valuable information but also employ scientifically validated persuasion techniques to encourage users to take action and get vaccinated. Our hope is that this project will not only improve public health but also advance our understanding of how AI can be used to facilitate effective health communication.

Technology use in health communication

Alongside our research on the intersection of AI and health communication, our team is also dedicated to exploring diverse topics in health communication, with a particular emphasis on technology use. Our research has included studies on how misinformation on news and social media platforms has hindered the adoption of COVID-19 vaccines, as well as on the potential of technology to facilitate communication between patients and physicians. Additionally, our team has investigated how best to communicate workplace health promotion programs to employees. By addressing a range of issues related to health communication and technology, our team hopes to make a meaningful contribution to improving public health outcomes and advancing our understanding of the role of technology in health communication.

Teaching

Multidisciplinary courses on AI & robotics

Our team is committed to advancing education in the field of AI and robotics, and has developed a range of courses to teach college students about these cutting-edge technologies. Our courses cover topics such as AI, machine learning algorithms, natural language processing, and humanoid robot programming, and provide students with hands-on experience designing and implementing chatbots and humanoid robot applications. We are proud to have been recognized for our efforts in this area, including receiving the Pedagogical Innovations Award from Chapman University. By helping students gain knowledge and experience in AI and robotics, we hope to prepare the next generation of innovators and problem-solvers in this rapidly evolving field.

Media Coverage

From factories, offices and medical centers to our homes, cars and even our local coffee shops, robots and artificial intelligence are playing an increasingly greater role in how we work, live and play...

Cincinnati Public Radio

October 3, 2017

As social robots permeate wide segments of our daily lives, they may become capable of influencing our attitudes, beliefs, and behaviors. In other words, robots may be able to persuade us, and this is already happening...

Cincinnati Business Courier

March 24, 2017

It’s no secret that the College of Informatics has the most technologically advanced building on campus — Griffin Hall. But Griffin Hall is also home to Pineapple. Dr. Lee and Dr. Liang are using Pineapple to conduct studies on how humans interact socially when a robot is present...

Inside NKU

October 2015

Between the studies on digital media production and computer sciences, Griffin Hall is home to some impressive technology. You might start seeing a new, digital face wandering the halls of the College of Informatics. That digital face is known as Pineapple, and it will be used for the next three years for research into social robotics...

The Northerner

October 19, 2015

Awards & Grants

Awards

Top Paper Award, National Communication Association, Communication and the Future Division (Lee & Liang, 2017)

Valerie Scudder Award: Jake Liang, Chapman University (2017)

Excellence in Research, Scholarship, and Creative Activity: Austin Lee, Northern Kentucky University (2017)

Faculty Research Excellence Award: Jake Liang, Chapman University (2016)

Top Paper Awards, International Communication Association, Instructional and Developmental Communication Division (Liang, 2015)

Grants

Pedagogical Innovations Grant: Austin Lee, Chapman University (2019)

Faculty Summer Fellowship: Austin Lee, Northern Kentucky University (2015)

Faculty Project Grant: Austin Lee, Northern Kentucky University (2015)

Research Grant: Jake Liang, Chapman University (2015)

Seed Grant: Austin Lee, Northern Kentucky University (2015)

Publications & Conference Presentations

Publications

Lee, S. A., & Cho, S. (2024). Interplay of gender and anthropomorphism in shaping perceptions of autonomous vehicles. In Adjunct Proceedings of the 16th International ACM Conference on Automotive User Interfaces and Interactive Vehicular Applications (AutomotiveUI '24). Association for Computing Machinery.

Cho, S., Nguyen, L. L., Wen, Y., Goto, Y., & Lee, S. A. (2022). Visualizing crocheting overlay. In N. Maiden & C. Sas (Eds.), Proceedings of the 14th Creativity and Cognition (pp. 406-419). Association for Computing Machinery.

Lee, S. A., & Liang, Y. (2019). A communication model of human-robot trust development for inclusive education. In J. Knox, Y. Wang, & M. S. Gallagher (Eds.), Speculative futures for artificial intelligence and educational inclusion (pp. 101-115). Springer Nature.

Lee, S. A., & Liang, Y. (2019). Robotic foot-in-the-door: Using sequential-request persuasive strategies in human-robot interaction. Computers in Human Behavior, 90, 351-356.

Lee, S. A., & Liang, Y. (2018). Theorizing verbally persuasive robots. In A. L. Guzman (Ed.), Human-machine communication: Rethinking communication, technology, and ourselves (pp. 119-143). Peter Lang.

Liang, Y., & Lee, S. A. (2017). Fear of autonomous robots: Evidence from national representative data with probability sampling. International Journal of Social Robotics, 9, 379-384.

Liang, Y., & Lee, S. A. (2016). Advancing the strategic messages affecting robot trust effect: The dynamic of user- and robot-generated content on human-robot trust and interaction outcomes. Cyberpsychology, Behavior, and Social Networking, 19, 538-544.

Lee, S. A., & Liang, Y. (2016). The role of reciprocity in verbally persuasive robots. Cyberpsychology, Behavior, and Social Networking, 19, 524-527.

Lee, S. A., & Liang, Y. (2015). Reciprocity in computer-human interaction: Source-based, norm-based, and affect-based explanations. Cyberpsychology, Behavior, and Social Networking, 18, 234-240.

Liang, Y., Lee, S. A., & Jang, J. (2013). Mindlessness and gaining compliance in computer-human interaction. Computers in Human Behavior, 29, 1572-1579.

Conference Presentations

Lee, S. A., & Kee, K. F. (2024). Uncanny, artificial, but curious: A mixed methods study on responses to sex robots. Paper presented at the 33rd IEEE International Conference on Robot and Human Interactive Communication (RO-MAN 2024). Pasadena, CA.

Wozniak, T., Lee, S. A., & Miller-Day, M. (2021). COVID-19 misinformation beliefs: Why perceived credibility of health communication information sources matter. Paper presented at the International Communication Association. Online.

Wozniak, T., Miller-Day, M., & Lee, S. A. (2021). Health misinformation and individual factors affecting compliance with COVID-19 health behavior recommendations. Paper presented at the Eastern Communication Association. Online.

Lee, S. A. (2019). Human-robot proxemics and compliance gaining. Paper presented at the 69th annual convention of the International Communication Association, Washington, DC.

Liang, Y., Lee, S. A., & Kee, K. F. (2019). The adoption of collaborative robots toward ubiquitous diffusion: A research agenda. Paper presented at the 14th ACM/IEEE International Conference on Human-Robot Interaction (HRI 2019) Workshop. Daegu, South Korea.

Lee, S. A., Appelman, A., & Waldridge, Z. J. (2018). Robot message credibility. Paper presented at the 68th annual convention of the International Communication Association, Prague, Czech Republic.

Lee, S. A., & Liang, Y. (2017). Theorizing verbally persuasive robots. Paper presented at the 103rd annual convention of the National Communication Association, Dallas, TX.

Lee, S. A., Liang, Y., & Thompson, A. M. (2017). Robotic foot-in-the-door: Using sequential-request persuasive strategies in human-robot interaction. Paper presented at the 67th annual convention of the International Communication Association, San Diego, CA.

Liang, Y., & Lee, S. A. (2016). Employing user-generated content to enhance human-robot interaction in a human-robot trust game. In Proceedings of the 11th ACM/IEEE International Conference on Human-Robot Interaction (HRI 2016). Christchurch, New Zealand.

Lee, S. A., Liang, Y., & Cho, S. (2016). Effects of anthropomorphism and reciprocity in persuasive computer agents. Paper presented at the 102nd annual convention of the National Communication Association, Philadelphia, PA.

Cho, S., Lee, S. A., & Liang, Y. (2016). Using anthropomorphic agents for persuasion. Paper presented at the 66th annual convention of the International Communication Association, Fukuoka, Japan.